DIGITIMES ASIA: AI workload surge creates opening for alternative memory and chiplet technologies, expert says

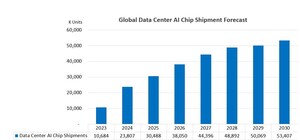

TAIPEI, Dec. 1, 2025 /PRNewswire/ -- The rapid expansion of artificial intelligence (AI) workloads is forcing a fundamental redesign of data center infrastructure, driving historic memory shortages and opening a rare market window for alternative memory technologies, according to Chuck Sobey, founder of ChannelScience and general chair of the Chiplet Summit.

Speaking on the industry's shift, Sobey highlighted that AI workloads differ structurally from traditional enterprise tasks. While legacy workloads stress the CPU, network, and storage—often leaving processors waiting for I/O—modern AI tasks, such as deep learning training, large language model (LLM) inference, and retrieval-augmented generation (RAG), are critically bound by memory bandwidth.

The bandwidth gap and power crisis

The demands of the AI era are unprecedented. Sobey noted that AI systems can require up to 10 terabytes per second (TB/s) of GPU memory bandwidth, roughly 50 to 200 times that of standard DDR5. Unlike web and cloud requests, which deal in kilobytes, AI accesses involve gigabyte-sized tensors, requiring algorithms that exercise the entire memory-storage hierarchy.
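The 50x to 200x ratio quoted above can be sanity-checked with simple arithmetic. The minimal Python sketch below assumes decimal units (1 TB/s = 1,000 GB/s), a single DDR5 DIMM with a 64-bit data bus, and peak theoretical transfer rates. The function names and the 10 GB tensor size are hypothetical illustrations and do not come from Sobey's remarks.

```python
"""Back-of-envelope check of the GPU-vs-DDR5 bandwidth ratio.

Assumptions (not from the source): decimal units (1 TB/s = 1,000 GB/s),
one DDR5 DIMM with a 64-bit data bus, and peak theoretical rates.
"""

GPU_BANDWIDTH_GB_S = 10_000.0  # "up to 10 TB/s", expressed in GB/s


def ddr5_dimm_peak_gb_s(mt_per_s: int, bus_width_bits: int = 64) -> float:
    """Peak theoretical bandwidth of one DDR5 DIMM in GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    return mt_per_s * 1e6 * bytes_per_transfer / 1e9


def implied_ddr5_gb_s(ratio: float) -> float:
    """DDR5 bandwidth implied by a given GPU-to-DDR5 ratio."""
    return GPU_BANDWIDTH_GB_S / ratio


def stream_time_s(tensor_gb: float, bandwidth_gb_s: float) -> float:
    """Seconds needed to read a tensor of tensor_gb gigabytes once."""
    return tensor_gb / bandwidth_gb_s


if __name__ == "__main__":
    # Baseline DDR5 bandwidth implied by each end of the quoted range
    for ratio in (50, 200):
        print(f"{ratio}x -> {implied_ddr5_gb_s(ratio):.1f} GB/s of DDR5")
    # Ratio against single DIMMs at two common DDR5 speed grades
    for speed in (4800, 6400):
        dimm = ddr5_dimm_peak_gb_s(speed)
        print(f"DDR5-{speed}: {dimm:.1f} GB/s, ratio {GPU_BANDWIDTH_GB_S / dimm:.0f}x")
    # Hypothetical 10 GB tensor read once at each bandwidth
    print(f"{stream_time_s(10, GPU_BANDWIDTH_GB_S) * 1e3:.1f} ms at 10 TB/s")
    print(f"{stream_time_s(10, ddr5_dimm_peak_gb_s(6400)) * 1e3:.1f} ms at DDR5-6400")
```

Under these assumptions, one reading consistent with the quoted range is that the 200x end corresponds to a single DDR5-6400 DIMM (51.2 GB/s peak) and the 50x end to roughly four of them; the article does not say which baseline Sobey used.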

This architectural strain has profound economic consequences. Sobey described the modern AI data center as a "token factory" whose revenue comes strictly from the tokens it processes. Today these facilities are limited by power and memory rather than by compute. Power density requirements are skyrocketing, forcing rack redesigns that support up to one megawatt per rack, 10 to 100 times previous standards.

Supply chains are already buckling under this pressure. Sobey pointed to a historic shortage in the memory sector, particularly of high-performance server memory, with reports that only 70% of orders are being filled and that prices have risen by up to 50%. For all but the largest hyperscalers, securing the necessary memory inventory is becoming increasingly difficult.

Chiplets: the gateway for MRAM and RRAM

Sobey identified this supply and power crunch as a strategic entry point for "alternative memories": technologies such as MRAM (magnetoresistive RAM), RRAM (resistive RAM), and phase-change memory (PCM) that lack the dedicated, multi-billion-dollar fabs of the incumbents.

Chiplet architecture is the critical enabler for this transition. By disaggregating functions from monolithic ASICs into smaller dies, chiplets let designers integrate the esoteric materials that alternative memories require without contaminating high-end logic fabrication processes. This heterogeneous integration also enables specific performance advantages, such as radiation tolerance or high-temperature resilience, which Sobey argues are necessary differentiators beyond simple cost claims.

The new "five-second rule"

The velocity of AI is also compressing economic rules of thumb. Sobey observed that the long-standing "five-minute rule," which holds that data accessed at least once every five minutes should be kept in DRAM, has accelerated to a "five-second rule." In the AI era, if data is not accessed within five seconds, the cost-benefit analysis dictates that it be evicted from expensive, fast memory tiers.
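The five-minute rule comes from a cost break-even: holding a page in fast memory costs a slice of that memory's price, while dropping it costs a share of a slower device's access capacity every time the page is re-fetched. The minimal Python sketch below assumes the standard Gray and Graefe form of that calculation. The function names and all numeric inputs are hypothetical placeholders, not figures from Sobey's talk, and the sketch does not claim to reproduce how the five-second figure was derived. It only shows why the interval shrinks when the slower tier serves many more accesses per second or the fast tier becomes more expensive.

```python
"""Break-even reference interval behind the "five-minute rule".

Sketch of the Gray/Putzolu calculation in the form popularized by Gray and
Graefe. All example inputs are hypothetical placeholders.
"""


def break_even_interval_s(
    pages_per_mb_fast: float,
    accesses_per_s_per_device: float,
    price_per_device: float,
    price_per_mb_fast: float,
) -> float:
    """Seconds between accesses at which caching and re-fetching cost the same.

    Cost of caching one page  = price_per_mb_fast / pages_per_mb_fast
    Cost of re-fetching it every T seconds
                              = price_per_device / (accesses_per_s_per_device * T)
    Setting the two equal and solving for T gives the product below.
    """
    technology_ratio = pages_per_mb_fast / accesses_per_s_per_device
    economic_ratio = price_per_device / price_per_mb_fast
    return technology_ratio * economic_ratio


def keep_in_fast_tier(seconds_between_accesses: float, interval_s: float) -> bool:
    """Keep a page cached only if it is reused at least as often as the break-even."""
    return seconds_between_accesses <= interval_s


if __name__ == "__main__":
    # Hypothetical inputs: 4 KB pages (256 per MB), a device serving
    # 1,000,000 random reads/s at $1,000, fast memory at $0.05 per MB.
    interval = break_even_interval_s(256, 1_000_000, 1_000, 0.05)
    print(f"break-even interval: {interval:.2f} s")
    print("page reused every 3 s kept?", keep_in_fast_tier(3.0, interval))
    print("page reused every 60 s kept?", keep_in_fast_tier(60.0, interval))
```

Because the interval is a product of a technology ratio and an economic ratio, a thousandfold increase in the slower tier's access rate cuts the break-even interval by the same factor, which is the kind of shift that turns minutes into seconds.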

Sobey said the current surge in AI is no mere ripple but a gathering wave, arguing that suppliers need to secure their positions now if they hope to retain an edge when pricing and supply chains eventually settle.

Sobey will discuss the impact of chiplets on the memory-storage hierarchy further during the DIGITIMES webinar on December 5, 2025, and at the Chiplet Summit in Santa Clara, California, scheduled for February 17–19, 2026.

SOURCE DIGITIMES ASIA
